Rate Limiting in Distributed System

Managing traffic and preventing system overload

Rate-limiting, or throttling, is a mechanism that rejects a request when a specific quota is exceeded. A technique to control how many requests a client can make to a service over a given time window. When a client exceeds their quota, subsequent requests are rejected or delayed.

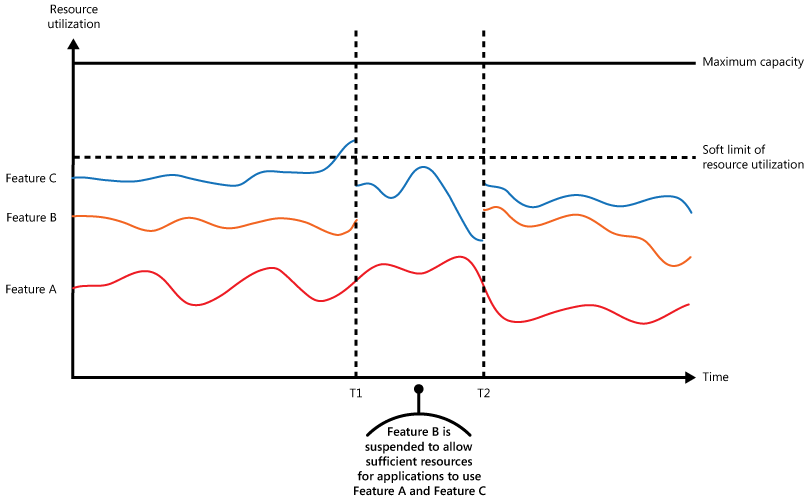

Throttling Pattern and Rate Limiting Pattern as key strategies for protecting services against overuse and abuse. It ensures fair resource allocation, and maintaining system stability under varying load conditions.

What is Rate Limiting?

Rate limiting defines quotas on resource usage, typically measured by:

Number of requests (e.g., 100 requests per minute)

Data volume (e.g., 1MB per second)

Concurrency (e.g., 10 parallel connections)

If a service allows 10 requests per second per API key, and a particular key makes 12 requests, then 2 requests will be rejected on average.

Why Implement Rate Limiting?

It helps keep systems fair and available for everyone from single user or a bot that throttles up the requests. By controlling how many requests a user or bot can make, it prevents any single source from overwhelming the service. This ensures that all users get a fair chance to access resources. It also adds a layer of security by blocking brute-force attacks and bot-nets that try to exploit the system through excessive or malicious requests.

They use brute-force algorithms to attack the system using a single machine or even multiple machines that constitute a network of bots known as a bot-net.

Types of Rate Limiting

Rate limiting can be broadly categorized in two types: Single process rate limiting, and distributed rate limiting. We will start with a single-process implementation first and then extend it to a distributed one.

Single-Process Rate Limiting

Tracking Timestamps

We could store a list of timestamps for each API key and periodically clean out any that are older than the quota interval (e.g., 1 minute). But as the number of requests grows, this approach becomes memory intensive.

Memory-Efficient Alternative: Bucketing

A more scalable approach is to divide time into fixed intervals (buckets) such as one-minute buckets and use counters instead of raw timestamps.

How It Works:

Each incoming request is mapped to a time bucket based on its timestamp.

The corresponding bucket’s counter is incremented.

Only the relevant bucket windows are retained.

Example:

If a request arrives at 12:00:18, it is counted under the bucket for 12:00.

This method compresses request information efficiently, using constant space per API key, regardless of the number of requests.

Now, with this memory-efficient setup, how can we enforce rate limits?

Enforcing Limits with a Sliding Window

We can implement rate limiting using a sliding window that moves across buckets in real time, tracking the requests within it. The window's length aligns with the quota time unit, like 1 minute. However, because the sliding window can overlap with multiple buckets, we must calculate a weighted sum of bucket counters to determine the requests within the window.

To compute this:

Calculate a weighted sum of overlapping bucket counters.

Weigh each bucket based on how much of it falls within the sliding window.

This approximation improves with smaller buckets (e.g., 10-second intervals).

Distributed Rate Limiting

Things get tricky in distributed systems with multiple servers, especially across regions. If each server has its own rate limiter, it can lead to two main issues:

Inconsistent limits

Race Conditions

When multiple processes handle requests, local rate limiting isn’t enough. We need shared state to coordinate limits globally across nodes. A shared data store is needed to track total requests per API key.

Shared Data Store Approach

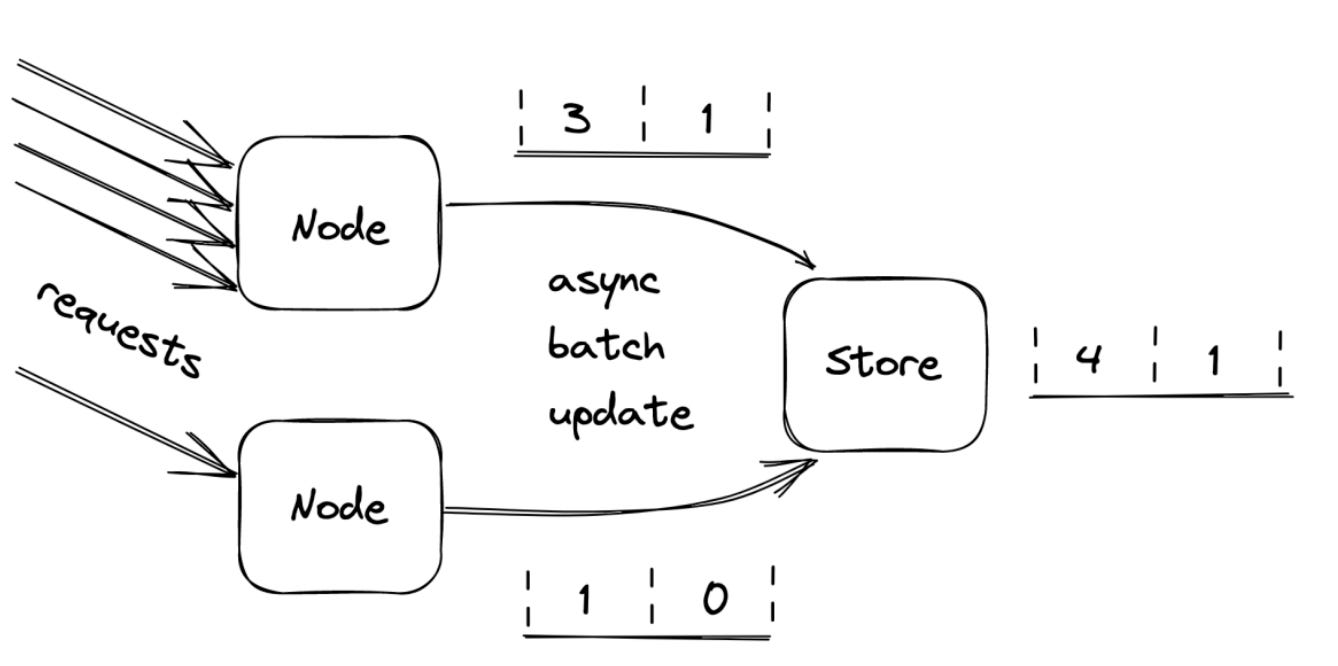

A central data store (e.g., Redis, DynamoDB, Memcached) can store counters for each API key and bucket.

Store two counters per API key (current and previous buckets).

Each request updates the counter via atomic operations like

INCR.

Challenges & Optimizations

Concurrency Issues

Simultaneous updates from multiple nodes can lead to race conditions. Using transactions solves this but is slow and resource-heavy. Atomic operations might be a solution (e.g., INCR, GETSET, COMPARE-AND-SWAP) to safely update counters.

Race Condition Example:

Performance Bottlenecks

Writing to the shared store on every request introduces latency and load.

Solution:

Batch updates in memory.

Periodically flush them asynchronously to the shared store.

Servers batch bucket updates in memory for some time, and flush them asynchronously to the data store at the end of it. This significantly reduces write frequency and improves throughput.

Store Downtime

What if the central data store becomes unavailable?

Enter the CAP theorem: you must choose between consistency and availability during network faults.

Safer fallback:

Continue to serve requests using the last known state from the data store.

Avoid outright rejections due to temporary unreachability — especially if the alternative is degraded business operations.

This compromise favors availability over strict consistency, which is often acceptable in real-world systems.

Rate Limiting Considerations

Are you safely hooking rate limiters into your middleware stack?

Make sure failures (like bugs or Redis downtime) don’t break your API—catch exceptions and let requests pass through if needed.

Are you showing clear rate limit errors to users?

Choose between HTTP 429 or 503 based on context, and return clear, actionable messages.

Can you safely turn off the rate limiters if necessary?

Use feature flags as escape valves and set up alerts to monitor how often limiters trigger.

Did you test each rate limiter in dark mode to see the impact?

Ensure your limits keep the API stable without disrupting users. You might need to collaborate with users to adjust their usage patterns.

When Not to Use Rate Limiting?

Rate limiting might not be necessary for internal services within a fully trusted environment where all clients are known, controlled, and operate at predictable loads. In such cases, adding rate limiting could introduce unnecessary complexity and latency without much benefit.

Conclusion

Rate limiting and throttling help protect systems from overuse. They make sure users get fair access. In distributed systems, things get more complex. Issues like race conditions and latency can happen. Using buckets, sliding windows, and atomic updates helps. Batching and async writes reduce load. These tools keep systems fast and reliable.

Rate limiting isn’t about saying ‘no’. It’s about saying ‘not now’ to ensure ‘yes’ is always available later.

𝗜𝗻𝘀𝗽𝗶𝗿𝗮𝘁𝗶𝗼𝗻𝘀 𝗮𝗻𝗱 𝗥𝗲𝗳𝗲𝗿𝗲𝗻𝗰𝗲𝘀

Rate Limiting Pattern, Microsoft

Understanding Distributed Systems by Roberto Vitillo